Curriculum Vitae | Google Scholar | LinkedIn

About

My research aims to make games and Augmented/Virtual Reality more believable by drawing on ideas from computational physics, psychophysics, graphics, and acoustics. I like working on research problems whose results feed into functioning real-world systems. Over the past decade I have advanced physical modeling techniques for real-time sound synthesis and propagation. These techniques are used in shipping interactive spatial audio systems and games experienced by millions of people worldwide.

Bio

Nikunj leads research projects at Microsoft Research's Redmond labs in spatial audio, computational acoustics, and computer graphics for games and AR/VR. As a senior researcher, he is responsible for conceiving new research directions, working on the technical problems with collaborators, mentoring interns, publishing findings, giving talks, and engaging closely with engineering groups to translate ideas into real-world impact. Over the last decade he has led Project Triton, a first-of-its-kind wave acoustics system that is now in production use in multiple major Microsoft products such as Gears of War and Windows 10. Triton is currently being opened up for external use as part of Project Acoustics. Nikunj has published and given talks at top academic and industrial venues across disciplines, including ACM SIGGRAPH, the Audio Engineering Society, the Acoustical Society of America, and the Game Developers Conference, and has served on the SIGGRAPH papers committee. Before Microsoft, he completed his PhD at UNC Chapel Hill, where his work helped establish sound as a new research direction. His entire thesis code was licensed by Microsoft.

Highlight

Triton: Spatial Wave Acoustics for games and AR/VR

Shipped products

- Triton is the engine inside Microsoft's Project Acoustics, currently in "designer preview"; if you're interested, sign up!

- AltspaceVR, May 2018

- Sea of Thieves, RARE Studio, Mar 2018

- Windows 10 Mixed Reality Portal, Nov 2017

- Gears of War 4, The Coalition Studio, Oct 2016

Triton is the first demonstration that high-quality wave acoustics is feasible for production games and Augmented/Virtual Reality. It accurately models sound wave propagation effects, including diffraction, on full 3D game maps for moving sources and a moving listener. A key novel aspect is that it gives the sound designer intuitive controls to dynamically tweak the physical acoustics. The result is believable environmental effects that transition smoothly as sounds and the player move through the world, such as scene-dependent reverberation, smooth occlusion, obstruction, and portaling effects.

Projects

Adaptive Sampling for Sound Propagation

Chakravarty R. Alla Chaitanya, John M. Snyder, Keith Godin, Derek Nowrouzezahrai, Nikunj Raghuvanshi

IEEE Transactions on Visualization and Computer Graphics (IEEE VR 2019), 25(4), April 2019 (to appear)

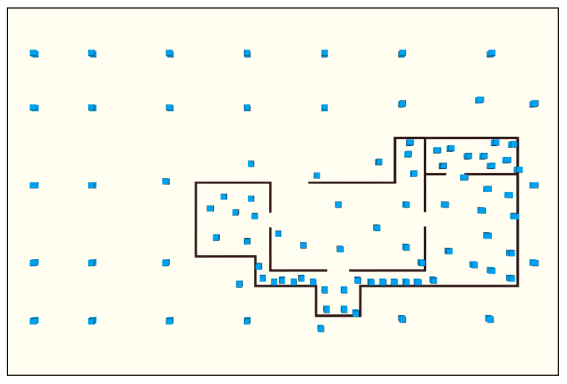

Precomputed techniques for sound and light must choose key "probe" locations where the propagating field is sampled, and then carefully interpolate the result during gameplay. Most prior work uses uniform sampling for this purpose. However, narrow spaces such as corridors pose a significant problem: one must either sample finely, wasting many samples in wide open regions, or risk missing an entire corridor in the sampling, which causes audible issues at runtime. We present an adaptive sampling approach that resolves these issues by smoothly varying probe density based on a novel “local diameter” measure of the space surrounding any given point. To cope with the resulting unstructured sampling, we also propose a novel interpolator based on radial weights over geodesic shortest paths, which achieves smooth acoustic effects that respect scene boundaries, unlike existing visibility-based methods that can cause audible discontinuities on motion.
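To make the geodesic interpolation idea concrete, here is a minimal sketch on a hypothetical 2D occupancy grid. It is not the paper's interpolator: the grid representation, the breadth-first-search distances, the `(1 - d/R)^2` falloff, and the names `geodesic_distances` and `interpolate` are all illustrative assumptions. What it does show is the property described above: a probe that is close in a straight line but on the other side of a wall receives little or no weight, because distance is measured along walkable paths.

```python
from collections import deque

# Hypothetical 2D toy scene: a list of strings where '#' marks a wall cell
# and '.' an open cell; positions are (row, col) tuples.

def geodesic_distances(grid, start):
    """Breadth-first search: shortest walkable path length (in 4-connected
    cell steps) from `start` to every reachable open cell."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != '#' and (nr, nc) not in dist):
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist

def interpolate(grid, query, probes, radius):
    """Blend per-probe values with weights that fall off with geodesic
    (not straight-line) distance. `probes` maps cell -> value. Probes that
    are unreachable or at least `radius` steps away get zero weight."""
    dist = geodesic_distances(grid, query)
    total_w = total = 0.0
    for cell, value in probes.items():
        d = dist.get(cell)
        if d is None or d >= radius:
            continue
        w = (1.0 - d / radius) ** 2  # assumed smooth radial falloff
        total_w += w
        total += w * value
    if total_w == 0.0:
        raise ValueError("no probe within the geodesic radius")
    return total / total_w

# Example: a thin wall ('#') separates the query cell from probe (0, 5).
grid = ["....#....",
        "....#....",
        "........."]
print(interpolate(grid, (0, 3), {(0, 0): 1.0, (0, 5): 10.0}, radius=6))  # 1.0
```

Here the probe at `(0, 5)` is two cells away in a straight line but six steps around the wall, so with `radius=6` it contributes nothing; a visibility- or Euclidean-distance weight would have blended it in.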

- [Paper (preprint)]

- [Bibtex]

@article{Chaitanya:2019:AdaptiveSampling,

title={Adaptive Sampling for Sound Propagation},

author={Chaitanya, Chakravarty R. A. and Snyder, John M. and Godin, Keith and Nowrouzezahrai, Derek and Raghuvanshi, Nikunj},

journal={IEEE Transactions on Visualization and Computer Graphics (Special Issue on IEEE Virtual Reality and 3D User Interfaces)},

volume = {25},

number={4},

month={4},

year={2019},

}

Ambient Sound Propagation

Zechen Zhang, Nikunj Raghuvanshi, John Snyder, Steve Marschner

ACM Transactions on Graphics (SIGGRAPH Asia 2018), 37(6), November 2018

Large extended sources, like waves on a beach or rain, are commonly used in games and VR to provide an active, immersive ambience to scenes. Propagating such sounds through 3D scenes is a daunting computational challenge, so it is common to use hacks based on point sources that can sound unconvincing. We present a novel incoherent source formulation for efficient wave-based precomputation on 3D scene geometry. Simulated directional loudness information is compressed using spherical harmonics and rendered with a simple, efficient algorithm. Overall, the system is light on RAM (~1MB per source) and CPU, enabling immersive spatial audio rendering of ambient sources for today's games and VR.
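The spherical-harmonic compression step can be illustrated with a small sketch that projects a directional loudness function onto the four real spherical harmonics of order 0 and 1, then evaluates the compressed result in any direction. The truncation order, the equal-area quadrature grid, and the helper names are assumptions made for illustration; the paper's actual encoding order and rendering algorithm may differ.

```python
import math

# Real spherical-harmonic normalization constants for orders 0 and 1.
C0 = 0.5 * math.sqrt(1.0 / math.pi)
C1 = math.sqrt(3.0 / (4.0 * math.pi))

def sh_basis(x, y, z):
    """Order-0 and order-1 real SH basis values for a unit direction."""
    return (C0, C1 * y, C1 * z, C1 * x)

def sphere_grid(n_z=200, n_phi=64):
    """Equal-area quadrature: midpoints in z = cos(theta) times uniform azimuth.
    Yields (direction, solid-angle weight); the weights sum to 4*pi."""
    w = (2.0 / n_z) * (2.0 * math.pi / n_phi)
    for i in range(n_z):
        z = -1.0 + (i + 0.5) * 2.0 / n_z
        r = math.sqrt(1.0 - z * z)
        for j in range(n_phi):
            phi = (j + 0.5) * 2.0 * math.pi / n_phi
            yield (r * math.cos(phi), r * math.sin(phi), z), w

def project(loudness):
    """Compress a directional loudness function to 4 SH coefficients."""
    coeffs = [0.0] * 4
    for (x, y, z), w in sphere_grid():
        f = loudness(x, y, z)
        for k, b in enumerate(sh_basis(x, y, z)):
            coeffs[k] += w * f * b
    return coeffs

def evaluate(coeffs, x, y, z):
    """Reconstruct loudness in direction (x, y, z) from the coefficients."""
    return sum(c * b for c, b in zip(coeffs, sh_basis(x, y, z)))
```

For example, projecting `lambda x, y, z: 1.0 + 0.5 * x` (louder toward +x) and evaluating at `(1, 0, 0)` recovers approximately 1.5, because that function lies entirely within the order-1 band; sharper directional patterns would need more coefficients.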

- [Paper]

- [Bibtex]

@article{Zhang:2018:Ambient,

title={Ambient Sound Propagation},

author={Zhang, Zechen and Raghuvanshi, Nikunj and Snyder, John and Marschner, Steve},

journal={ACM Trans. Graph.},

volume = {37},

number={6},

month={11},

articleno={184},

doi={10.1145/3272127.3275100},

year={2018},

}

Parametric Directional Coding for Precomputed Sound Propagation

Nikunj Raghuvanshi and John Snyder, ACM Transactions on Graphics (SIGGRAPH), 37(4), August 2018

Prior wave-based approaches to precomputed ("baked") sound propagation did not model directional acoustic effects of scene geometry. Instead, they used simplifications such as assuming that the initial sound reaches the listener from the line-of-sight direction, potentially through walls, and that reverberation arrives at the listener equally from all directions. While these approximations work reasonably well when analyzing the acoustics of a single room, today's game scenes have vast maps with many rooms and partially or fully outdoor areas. In this paper we present the first system to efficiently precompute and render immersive spatial wave-acoustic effects, such as diffracted sound arriving around doorways, directional reflections, and anisotropic reverberation, within RAM and CPU footprints that are immediately practical for games and VR.
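One standard way to estimate the direction from which the first wavefront arrives, given simulated pressure and particle velocity at the listener, is to integrate the acoustic intensity `p * v` over a short window after onset; energy flows along the propagation direction, so the sound comes from the opposite direction. The sketch below assumes such signals are available and in consistent units; the window length and the name `arrival_direction` are illustrative, and this is not necessarily how the paper extracts directions.

```python
import math

def arrival_direction(pressure, velocity, onset, window):
    """Unit vector pointing toward where the first wavefront comes FROM.
    pressure: list of samples; velocity: list of (vx, vy, vz) samples.
    Integrates instantaneous intensity p*v over [onset, onset + window)."""
    ix = iy = iz = 0.0
    for n in range(onset, min(onset + window, len(pressure))):
        p = pressure[n]
        vx, vy, vz = velocity[n]
        ix += p * vx
        iy += p * vy
        iz += p * vz
    norm = math.sqrt(ix * ix + iy * iy + iz * iz)
    if norm == 0.0:
        raise ValueError("no net energy flow in the window")
    # Intensity points along propagation; the source lies the opposite way.
    return (-ix / norm, -iy / norm, -iz / norm)

# Example: a wavefront travelling toward +x arrives first, followed later
# by a reflection travelling toward +y (normalized units, rho * c = 1).
pressure = [0.0, 1.0, 0.5, 0.0, 0.0, 0.8]
velocity = [(s, 0.0, 0.0) for s in pressure[:5]] + [(0.0, 0.8, 0.0)]
print(arrival_direction(pressure, velocity, onset=1, window=3))  # about (-1, 0, 0)
```

Keeping the window short is what isolates the initial sound: widening it to cover the whole signal would also pull in the later reflection and tilt the estimate toward -y.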

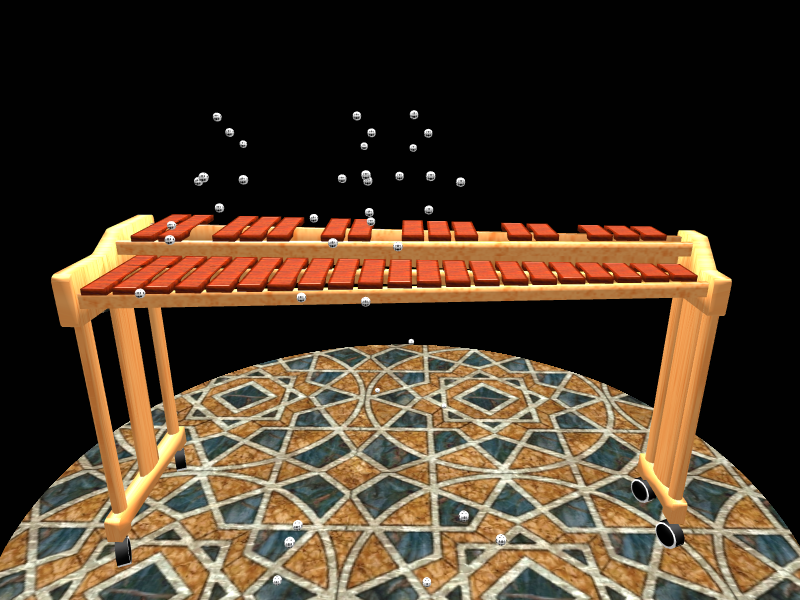

Aerophones in Flatland: Interactive Wave Simulation of Wind Instruments

Andrew Allen and Nikunj Raghuvanshi, ACM Transactions on Graphics (SIGGRAPH), 34(4), July 2015

This paper describes the first real-time technique to synthesize full-audible-bandwidth sounds for 2D virtual wind instruments. The user is presented with a sandbox interface where they can draw any bore shape and create tone holes, valves or mutes. The system is always online, synthesizing sounds from the drawn geometry as governed by wave physics. Our main contribution is an interactive wave solver that executes entirely on modern graphics cards with a novel numerical formulation supporting glitch-free online geometry modification.
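For readers unfamiliar with interactive wave solvers, the following is a minimal CPU sketch of a standard 2D staggered-grid finite-difference time-domain (FDTD) acoustic solver whose wall mask is re-read on every step, so geometry can be edited while the simulation runs. It is not the paper's GPU formulation and makes no attempt at its glitch-free handling of geometry changes; the normalized units, the Courant number 0.5, and all names are illustrative assumptions.

```python
def make_grid(nx, ny, value=0.0):
    """nx-by-ny nested list filled with `value`."""
    return [[value] * ny for _ in range(nx)]

def fdtd_step(p, vx, vy, wall, courant=0.5):
    """One leapfrog step of the 2D linear acoustic equations in normalized
    units (rho = c = dx = 1, dt = courant; stable for courant <= 1/sqrt(2)).
    p: nx x ny pressures at cell centres; vx: (nx+1) x ny velocities on
    vertical faces; vy: nx x (ny+1) velocities on horizontal faces;
    wall: nx x ny booleans. Outer-boundary faces are never updated, so if
    they start at zero the domain is a rigid box."""
    nx, ny = len(p), len(p[0])
    # Update x-velocities on interior vertical faces from the pressure gradient.
    for i in range(1, nx):
        for j in range(ny):
            if wall[i - 1][j] or wall[i][j]:
                vx[i][j] = 0.0  # rigid face next to a wall cell
            else:
                vx[i][j] -= courant * (p[i][j] - p[i - 1][j])
    # Same for y-velocities on interior horizontal faces.
    for i in range(nx):
        for j in range(1, ny):
            if wall[i][j - 1] or wall[i][j]:
                vy[i][j] = 0.0
            else:
                vy[i][j] -= courant * (p[i][j] - p[i][j - 1])
    # Update pressure from the velocity divergence; wall cells hold zero.
    for i in range(nx):
        for j in range(ny):
            if wall[i][j]:
                p[i][j] = 0.0
            else:
                p[i][j] -= courant * (vx[i + 1][j] - vx[i][j]
                                      + vy[i][j + 1] - vy[i][j])

# Example: a rigid wall splits a 20 x 10 domain; an impulse starts on the left.
nx, ny = 20, 10
p, vx, vy = make_grid(nx, ny), make_grid(nx + 1, ny), make_grid(nx, ny + 1)
wall = make_grid(nx, ny, False)
for j in range(ny):
    wall[10][j] = True
p[5][5] = 1.0
for _ in range(100):
    fdtd_step(p, vx, vy, wall)
```

Because the wall mask is consulted on every step, toggling `wall` cells between calls (for instance, opening a tone hole) immediately changes the geometry the wave sees, though a naive toggle like this can produce clicks that a more careful formulation would avoid.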

Parametric Wave Field Coding for Precomputed Sound Propagation

Nikunj Raghuvanshi and John Snyder, ACM Transactions on Graphics (SIGGRAPH), 33(4), July 2014

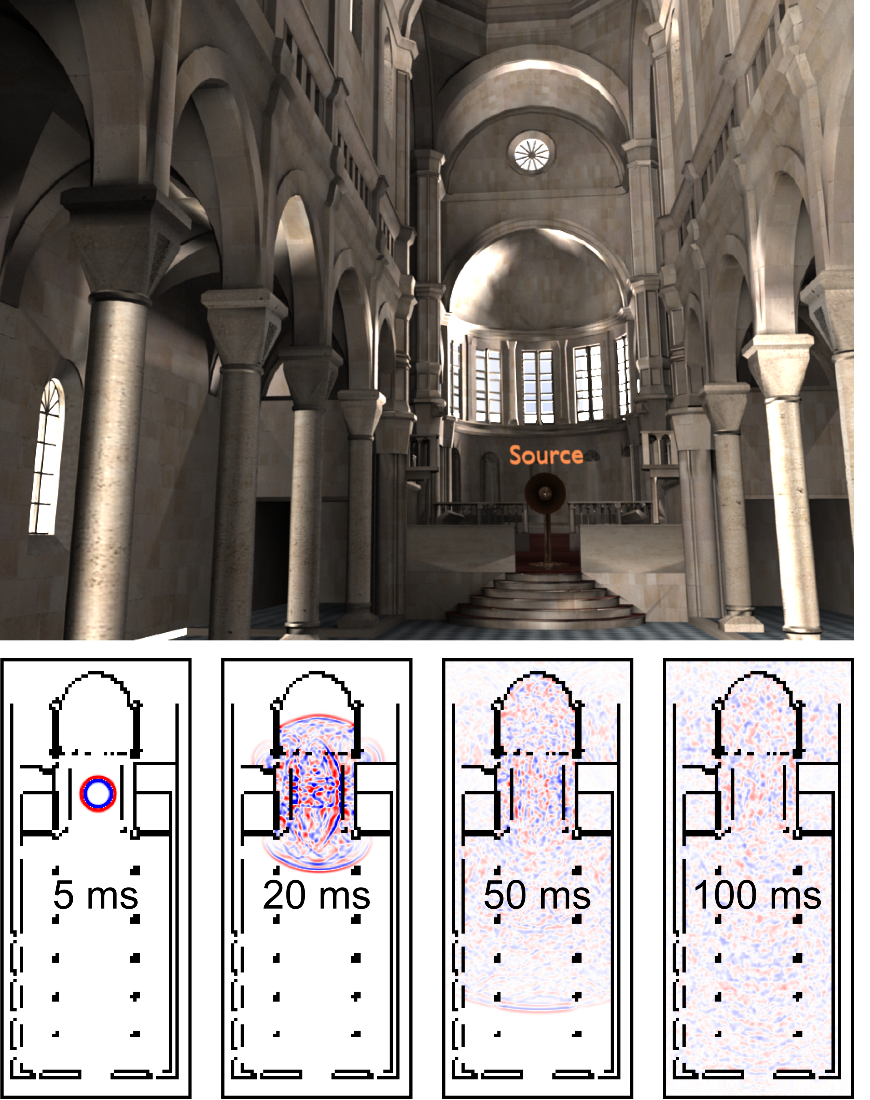

This paper presents a precomputed wave propagation technique that is immediately practical for games and VR. We demonstrate convincing spatially varying effects in complex scenes, including occlusion/obstruction and reverberation. The technique reduces both memory use and signal-processing cost by orders of magnitude compared to prior wave-based approaches. The key observation is that while raw acoustic fields are quite chaotic in complex scenes and depend sensitively on source and listener location, perceptual parameters derived from these fields, such as loudness or reverberation time, vary far more smoothly and are thus amenable to efficient representation.
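Reverberation time is one such perceptual parameter. As an illustration, this sketch estimates it from an impulse response using Schroeder backward integration and a least-squares line fit over the -5 to -25 dB portion of the energy decay curve, extrapolated to a 60 dB decay. This is a textbook estimator chosen for illustration, not necessarily the one used in the paper; the function name and fit range are assumptions.

```python
import math

def schroeder_t60(ir, fs, start_db=-5.0, end_db=-25.0):
    """Estimate reverberation time (seconds) from an impulse response.
    Schroeder backward integration gives the energy decay curve (EDC);
    a least-squares line over [end_db, start_db] is extrapolated to -60 dB."""
    edc = [0.0] * len(ir)
    acc = 0.0
    for i in range(len(ir) - 1, -1, -1):  # energy remaining from sample i on
        acc += ir[i] * ir[i]
        edc[i] = acc
    ts, dbs = [], []
    for i, e in enumerate(edc):
        if e <= 0.0:
            break
        level = 10.0 * math.log10(e / edc[0])
        if end_db <= level <= start_db:
            ts.append(i / fs)
            dbs.append(level)
    if len(ts) < 2:
        raise ValueError("decay range not covered by the response")
    mt, md = sum(ts) / len(ts), sum(dbs) / len(dbs)
    slope = (sum((t - mt) * (d - md) for t, d in zip(ts, dbs))
             / sum((t - mt) ** 2 for t in ts))  # dB per second
    return -60.0 / slope

# Example: a synthetic exponential decay exp(-t / 0.1) sampled at 1 kHz.
fs, tau = 1000, 0.1
ir = [math.exp(-n / (fs * tau)) for n in range(3 * fs)]
print(schroeder_t60(ir, fs))  # close to 3 * ln(10) * tau, about 0.691 s
```

A single number like this changes slowly as the listener moves, which is why such parameters compress far better than the raw impulse responses they are derived from.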

This paper provides the framework underlying Triton.

Sound Synthesis for Impact Sounds in Video Games

Shipped in Crackdown 2 (with Guy Whitmore and Kristofor Mellroth at Microsoft Game Studios)

Brandon Lloyd, Nikunj Raghuvanshi, Naga K. Govindaraju, ACM Symposium on Interactive 3D Graphics and Games (I3D), 2011

Video games typically store recordings of many variations of a sound event to avoid repetitiveness, such as multiple footstep sounds for a walking animation. We present a technique that can produce unlimited variations of an impact sound while usually costing about the same memory as a single clip. The main idea is an analysis-synthesis approach: a single audio clip is used to extract the object's resonant mode frequencies and their time decay, along with a fixed residual signal in the time domain. The resulting modal model is then amenable to on-the-fly variation and re-synthesis.
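The re-synthesis half of this idea can be sketched as follows, assuming the analysis stage has already produced a list of modes (frequency, decay rate, amplitude) and a short residual clip. Each call renders the modes as exponentially decaying sinusoids, adds the residual, and optionally perturbs the mode parameters to produce a new variation. The random-jitter variation scheme and all names here are hypothetical, not the paper's.

```python
import math
import random

def synthesize(modes, residual, fs, n_samples, jitter=0.0, seed=None):
    """Re-synthesize an impact from analysed modes plus a residual clip.
    modes: list of (freq_hz, decay_per_s, amplitude); residual: samples
    added at the start. jitter > 0 randomly scales each mode's frequency and
    amplitude by up to +/- jitter (hypothetical variation scheme)."""
    rng = random.Random(seed)
    varied = [(f * (1.0 + rng.uniform(-jitter, jitter)), d,
               a * (1.0 + rng.uniform(-jitter, jitter))) for f, d, a in modes]
    out = []
    for n in range(n_samples):
        t = n / fs
        s = sum(a * math.exp(-d * t) * math.sin(2.0 * math.pi * f * t)
                for f, d, a in varied)
        if n < len(residual):
            s += residual[n]
        out.append(s)
    return out

# Example: two variations of the same strike from one stored analysis.
modes = [(440.0, 20.0, 1.0), (1200.0, 40.0, 0.5)]
residual = [0.1, -0.05, 0.02]
hit_a = synthesize(modes, residual, 8000, 4000, jitter=0.03, seed=1)
hit_b = synthesize(modes, residual, 8000, 4000, jitter=0.03, seed=2)
```

Only the handful of mode parameters and the short residual need to be stored, so each additional variation costs a random seed rather than another recording.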

Precomputed Wave Simulation for Real-Time Sound Propagation of Dynamic Sources in Complex Scenes

Nikunj Raghuvanshi, John Snyder, Ravish Mehra, Ming C. Lin, and Naga K. Govindaraju, ACM Transactions on Graphics (SIGGRAPH), 29(3), July 2010

This paper presents the first technique for precomputed wave propagation on complex, 3D game scenes. It utilizes the ARD wave solver to compute acoustic responses for a large set of potential source and listener locations in the scene. Each response is represented with the time and amplitude of arrival of multiple wavefronts, along with a residual frequency trend. Our system demonstrates realistic, wave-based acoustic effects in real time, including diffraction low-passing behind obstructions, sound focusing, hollow reverberation in empty rooms, sound diffusion in fully-furnished rooms, and realistic late reverberation.
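A response stored as a list of wavefront arrivals can be rendered by placing a delayed, scaled copy of the dry signal at each arrival time. This minimal sketch assumes arrivals are given as (delay in seconds, amplitude) pairs, rounds delays to whole samples, and ignores the residual frequency trend mentioned above; the function name and data layout are illustrative.

```python
def render(dry, arrivals, fs):
    """Convolve a dry signal with a sparse response made of wavefront arrivals.
    arrivals: list of (delay_seconds, amplitude) pairs, one per wavefront."""
    taps = [(round(delay * fs), amp) for delay, amp in arrivals]
    out = [0.0] * (len(dry) + max(d for d, _ in taps))
    for d, amp in taps:
        for n, x in enumerate(dry):
            out[n + d] += amp * x  # delayed, scaled copy of the dry signal
    return out

# Example: a click heard via a direct path and a later, weaker wavefront.
print(render([1.0, 0.0, 0.0], [(0.001, 0.5), (0.003, 0.25)], 1000))
# [0.0, 0.5, 0.0, 0.25, 0.0, 0.0]
```

Because only a few taps are non-zero, this direct form is cheap for a short list of arrivals; a dense late tail would instead call for FFT-based convolution.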

Efficient and Accurate Sound Propagation using Adaptive Rectangular Decomposition (ARD)

Nikunj Raghuvanshi, Rahul Narain and Ming C. Lin, IEEE Transactions on Visualization and Computer Graphics (TVCG), 15(5), 2009

We present a technique that relies on an adaptive rectangular decomposition of 3D scenes to enable efficient and accurate simulation of sound propagation in complex virtual environments. It exploits the known analytical solution of the wave equation in rectangular domains, allowing the field within each rectangular spatial partition to be time-stepped without incurring numerical error. The spatial partitions communicate through finite-difference-like linear operators, which do incur numerical error in the form of weak artificial reflections. Because it relies on analytical solutions, the technique provides reasonable accuracy at low spatial resolutions close to the Nyquist limit.
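Why the analytical update avoids numerical error can be seen in one dimension. In a rigid-walled interval of length L, pressure is a sum of cosine modes cos(iπx/L), and each mode amplitude m_i obeys a harmonic-oscillator equation with angular frequency ω_i = cπi/L, whose exact discrete-time update is m^{n+1} = 2 m^n cos(ω_i Δt) - m^{n-1}. The sketch below implements only this free-evolution step in a simplified 1D setting; forcing terms and the interface operators between partitions are omitted, and the names are illustrative.

```python
import math

def step_modes(m_curr, m_prev, length, c, dt):
    """Advance cosine-mode amplitudes of a rigid-walled 1D interval by one step.
    Mode i has angular frequency omega_i = c*pi*i/length; the recurrence
    m_next = 2*m_curr*cos(omega*dt) - m_prev is exact for free oscillation."""
    m_next = []
    for i, (cur, prev) in enumerate(zip(m_curr, m_prev)):
        omega = c * math.pi * i / length
        m_next.append(2.0 * cur * math.cos(omega * dt) - prev)
    return m_next

def pressure(modes, length, x):
    """Sum the cosine modes to get pressure at position x in [0, length]."""
    return sum(m * math.cos(math.pi * i * x / length) for i, m in enumerate(modes))

# Example: three modes stepped 50 times with a 1 ms step.
length, c, dt = 1.0, 343.0, 1e-3
amps = [0.2, 1.0, 0.5]
omegas = [c * math.pi * i / length for i in range(len(amps))]
prev = [a * math.cos(-w * dt) for a, w in zip(amps, omegas)]  # state at t = -dt
cur = list(amps)                                              # state at t = 0
for _ in range(50):
    cur, prev = step_modes(cur, prev, length, c, dt), cur
print(pressure(cur, length, 0.25))
```

After stepping, each mode amplitude matches the analytical a_i cos(ω_i t) to rounding error regardless of the step size, which is the property that lets the method run at coarse resolutions without the dispersion a plain finite-difference scheme would introduce.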

Interactive Sound Synthesis for Large Scale Virtual Environments

Nikunj Raghuvanshi and Ming C. Lin, ACM Symposium on Interactive 3D Graphics and Games (I3D), 2006

We present several perceptually based optimizations for modal sound synthesis that let synthesis scale to virtual scenes with hundreds of sounding objects.
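As an illustration of perceptually motivated culling, this sketch drops modes whose current amplitude has decayed below a threshold and then keeps only the loudest remaining modes across all objects within a fixed budget. The threshold, the budget, and the greedy global ranking are assumptions for illustration; the paper's specific optimizations may differ.

```python
import math

def select_modes(objects, t, threshold, budget):
    """objects: dict name -> list of (freq_hz, decay_per_s, amplitude) modes.
    Drops modes whose current amplitude a*exp(-d*t) is below `threshold`,
    then keeps only the `budget` loudest survivors across all objects."""
    candidates = []
    for name, modes in objects.items():
        for f, d, a in modes:
            level = a * math.exp(-d * t)
            if level >= threshold:
                candidates.append((level, name, (f, d, a)))
    candidates.sort(key=lambda item: item[0], reverse=True)
    kept = {name: [] for name in objects}
    for _, name, mode in candidates[:budget]:
        kept[name].append(mode)
    return kept

# Example: 0.1 s after two impacts, with room for only two modes.
objects = {"bell": [(500.0, 1.0, 1.0), (1500.0, 50.0, 1.0)],
           "cup": [(2000.0, 2.0, 0.3), (3000.0, 2.0, 0.01)]}
print(select_modes(objects, t=0.1, threshold=0.01, budget=2))
# {'bell': [(500.0, 1.0, 1.0)], 'cup': [(2000.0, 2.0, 0.3)]}
```

Ranking globally rather than per object means a quiet object can lose all its modes when a loud one dominates, which keeps the total synthesis cost bounded no matter how many objects are sounding.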

Publications

Adaptive Sampling for Sound Propagation

Chakravarty R. Alla Chaitanya, John M. Snyder, Keith Godin, Derek Nowrouzezahrai, Nikunj Raghuvanshi

IEEE Transactions on Visualization and Computer Graphics (IEEE VR 2019), 25(4), April 2019 (to appear)

Ambient Sound Propagation

Zechen Zhang, Nikunj Raghuvanshi, John Snyder, Steve Marschner

ACM Transactions on Graphics (SIGGRAPH Asia 2018), 37(6), November 2018

Parametric Directional Coding for Precomputed Sound Propagation

Nikunj Raghuvanshi, John Snyder

ACM Transactions on Graphics (SIGGRAPH), August 2018

Wave Acoustics in a Mixed Reality Shell

Keith W. Godin, Ryan Rohrer, John Snyder, Nikunj Raghuvanshi

AES International Conference on Audio for Virtual and Augmented Reality (AVAR), August 2018 (to appear)

Towards real-time two-dimensional wave propagation for articulatory speech synthesis

Victor Zappi, Arvind Vasudevan, Andrew Allen, Nikunj Raghuvanshi, Sidney Fels

Proceedings of Meetings on Acoustics, 2016

Aerophones in Flatland: Interactive Wave Simulation of Wind Instruments

Andrew Allen, Nikunj Raghuvanshi

ACM Transactions on Graphics (SIGGRAPH), August 2015

Parametric Wave Field Coding for Precomputed Sound Propagation

Nikunj Raghuvanshi, John Snyder

ACM Transactions on Graphics (SIGGRAPH 2014), August 2014

RoomAlive: Magical Experiences Enabled by Scalable, Adaptive Projector-Camera Units

Brett Jones, Rajinder Sodhi, Michael Murdock, Ravish Mehra, Hrvoje Benko, Andrew Wilson, Eyal Ofek, Blair MacIntyre, Nikunj Raghuvanshi, Lior Shapira

ACM Symposium on User Interface Software and Technology (UIST), 2014

Acoustic pulse propagation in an urban environment using a three-dimensional numerical simulation

Ravish Mehra, Nikunj Raghuvanshi, Anish Chandak, Donald G. Albert, D. Keith Wilson, and Dinesh Manocha

The Journal of the Acoustical Society of America, 2014

Wave-Based Sound Propagation in Large Open Scenes using an Equivalent Source Formulation

Ravish Mehra, Nikunj Raghuvanshi, Lakulish Antani, Anish Chandak, Sean Curtis, Dinesh Manocha

ACM Transactions on Graphics, 2013

An Efficient GPU-based Time Domain Solver for the Acoustic Wave Equation

Ravish Mehra, Nikunj Raghuvanshi, Lauri Savioja, Ming Lin, Dinesh Manocha

Applied Acoustics (Elsevier), 2012

Sound Synthesis for Impact Sounds in Video Games

D. Brandon Lloyd, Nikunj Raghuvanshi, and Naga K. Govindaraju

ACM Symposium on Interactive 3D Graphics and Games (I3D), 2011

Precomputed Wave Simulation for Real-Time Sound Propagation of Dynamic Sources in Complex Scenes

Nikunj Raghuvanshi, John Snyder, Ravish Mehra, Ming C. Lin, and Naga K. Govindaraju

ACM Transactions on Graphics (SIGGRAPH), 2010

Interactive Physically-based Sound Simulation

Nikunj Raghuvanshi

PhD Thesis, Department of Computer Science, UNC Chapel Hill, 2010

Efficient Numerical Acoustic Simulation on Graphics Processors using Adaptive Rectangular Decomposition

Nikunj Raghuvanshi, Brandon Lloyd, Naga K. Govindaraju, and Ming C. Lin

European Acoustics Association Symposium on Auralization, 2009

Efficient and Accurate Sound Propagation using Adaptive Rectangular Decomposition

Nikunj Raghuvanshi, Rahul Narain and Ming C. Lin

IEEE Transactions on Visualization and Computer Graphics, 2009

Accelerated Wave-based Acoustic Simulation

Nikunj Raghuvanshi, Nico Galoppo and Ming C. Lin

ACM Solid and Physical Modeling Symposium, 2008

Real-Time Sound Synthesis and Propagation for Games

Nikunj Raghuvanshi, Christian Lauterbach, Anish Chandak, Dinesh Manocha, and Ming C. Lin

Communications of the ACM, 2007

Physically Based Sound Synthesis for Large-Scale Virtual Environments

Nikunj Raghuvanshi and Ming C. Lin

IEEE Computer Graphics and Applications, 2007

Interactive Sound Synthesis for Large Scale Environments

Nikunj Raghuvanshi and Ming C. Lin

Proceedings of the ACM Symposium on Interactive 3D Graphics and Games (I3D), 2006

A Cache-Efficient Sorting Algorithm for Database and Data Mining Computations using Graphics Processors

Naga K. Govindaraju, Nikunj Raghuvanshi, Michael Henson, and Dinesh Manocha

Department of Computer Science, UNC Chapel Hill, Tech. Report, 2005

Fast and Approximate Stream Mining of Quantiles and Frequencies Using Graphics Processors

Naga K. Govindaraju, Nikunj Raghuvanshi, and Dinesh Manocha

Proceedings of ACM SIGMOD, 2005

Raytraced rendering using t-buffer and shadow-buffer

Nikunj Raghuvanshi and Sanjay G. Dhande

International Journal of Information Technology, 2004

Patents

Granted

Virtually Visualizing Energy, 2018

Jaron Lanier, Kishore Rathinavel, Nikunj Raghuvanshi

US Patent #9,922,463

Enhanced spatial impression for home audio, 2017

Nikunj Raghuvanshi, Daniel Morris, Andrew Wilson, Yong Rui, Desney Tan, Jeannette Wing

US Patent #9,560,445

Dynamic calibration of an audio system, 2017

Desney Tan, Daniel Morris, Andrew Wilson, Yong Rui, Nikunj Raghuvanshi, Jeannette Wing

US Patent #9,729,984

Parametric Wave Field Coding For Real-Time Sound Propagation for Dynamic Sources, 2016

Nikunj Raghuvanshi, John Snyder

US Patent #9,510,125

Real-Time Sound Propagation For Dynamic Sources, 2016

Nikunj Raghuvanshi, John Snyder, Ming C. Lin, Naga Govindaraju

US Patent #9,432,790

Applications

- Directional Propagation, MS404528-US-PSP, disclosure filed May 15, 2018

- Privacy Preserving Sensor Apparatus, MS#339904.02, filed on January 18, 2014

- Structural Element For Sound Field Estimation And Production, MS#339901.01, filed on January 9, 2014

- Adapting Audio Based Upon Detected Environmental Acoustics, MS#339900.01, filed on December 20, 2013

Talks & abstracts (excluding first-authored paper presentations)

- [Invited] Triton: Practical pre-computed sound propagation for games and virtual reality, Nikunj Raghuvanshi, John Tennant, John Snyder, The Journal of the Acoustical Society of America 141 (5), 2017

- 'Gears of War 4', Project Triton: Pre-Computed Environmental Wave Acoustics, jointly with John Tennant, Game Developers Conference, Mar 2016. [Slide deck (600MB)]

- [Invited] Numerical wave simulation for interactive audio-visual applications, Nikunj Raghuvanshi, Andrew Allen, John Snyder, The Journal of the Acoustical Society of America 139 (4), 2016

- [Invited] Adaptive rectangular decomposition: A spectral, domain-decomposition approach for fast wave solution on complex scenes, Nikunj Raghuvanshi, Ravish Mehra, Dinesh Manocha, Ming C. Lin, The Journal of the Acoustical Society of America 132 (3), 2012

- [Invited] Real-time wave acoustics for games, Meeting of the Audio Engineering Society (AES), Oct 2011

- Real‐time auralization of wave simulation in complex three‐dimensional acoustic spaces, Meeting of the Acoustical Society of America, May 2011

- Sound Synthesis in Crackdown 2, Microsoft Gamefest, 2011

- [Rated among top talks at GDC 2011] Sound Synthesis in Crackdown 2 and Wave Acoustics for Games, Game Developers Conference (GDC), 2011

- Interactive Physically-based Sound Synthesis and Propagation, Microsoft Research, 2009

- Real-time Physically-based Sound Synthesis for Games, Game Developers Conference (GDC), 2007

- Perceptual Optimizations for Interactive Sound Synthesis in Virtual Environments, as part of the course “Exploiting Perception in High-Fidelity Virtual Environments”, ACM SIGGRAPH, 2006

Professional service

- Program Committee Member, Technical Papers, ACM SIGGRAPH 2017

- Program Committee Member, Technical Papers, ACM Symposium on Computer Animation (SCA) 2017

- Program Committee Member, Technical Papers, ACM SIGGRAPH 2016

- Program Committee Member, Technical Papers, ACM Symposium on Computer Animation (SCA) 2016

- Reviewer [Graphics] ACM SIGGRAPH, SIGGRAPH Asia, ACM Symposium on Computer Animation, ACM Transactions on Graphics, Eurographics, Computer Graphics Forum

- Reviewer [Acoustics] Journal of the Acoustical Society of America (JASA), IEEE Transactions on Audio, Speech and Language Processing (TASL), Computer Music Journal, ASME Journal of Vibration and Acoustics

- Reviewer [HCI/AR] Conference on Human Factors in Computing Systems (CHI), User Interface Software and Technology (UIST), IEEE International Symposium on Mixed and Augmented Reality (ISMAR)